Queue delay affecting CDN purges and emails

Postmortem

Summary

PyPI initially experienced delays in features which used a centralized task queue (such as purging the CDN cache and sending emails) due to a poorly performing task type. This was followed by an outage due to the combination of the steps taken to mitigate the queue issue and unrelated changes that affected PyPI’s performance, resulting in PyPI becoming unavailable via the web or for installations.

Details

On Thu, 23 Jun 2022 at 04:55 UTC, PyPI admins deployed a change which introduced a new periodic task designed to aggregate statistics about 2FA usage on PyPI. This task was designed to run every 5 minutes and sampled multiple counts of different characteristics of 2FA usage.

This task used a database query that attempted to count a large number of rows, and had poor performance, causing high database utilization and a long processing time for the task. However, while long-running, the task was finishing and publishing metrics, and so PyPI admins were unaware of its poor performance. Over time, however, the tasks that workers were actively working on began to include more and more of these poorly-performing tasks, exhausting the number of available workers to process other tasks.

On Sun, 26 Jun 2022 at 17:29 UTC, PyPI admins deployed an unrelated change which replaced the JSON serializer used by PyPI from the standard library JSON serializer to a third-party JSON serializer, with benchmarks indicating that this would result in a 6x savings on time to serialize JSON, and thus a significant savings on response time for PyPI’s various JSON-rendering endpoints.

At 21:06 UTC, PyPI admins began to receive reports that newly published versions were not being immediately updated in the web frontend for PyPI, not installable, and that emails were delayed. However, because the queue backlog was sufficiently small at this point, this small number of early reports were resolved within an hour and attributed to transient errors.

Over the course of the following 12 hours, which coincided with the US EST evening, night and early morning, PyPI admins received an increasing stream of user reports that emails were delayed and project pages were not updating.

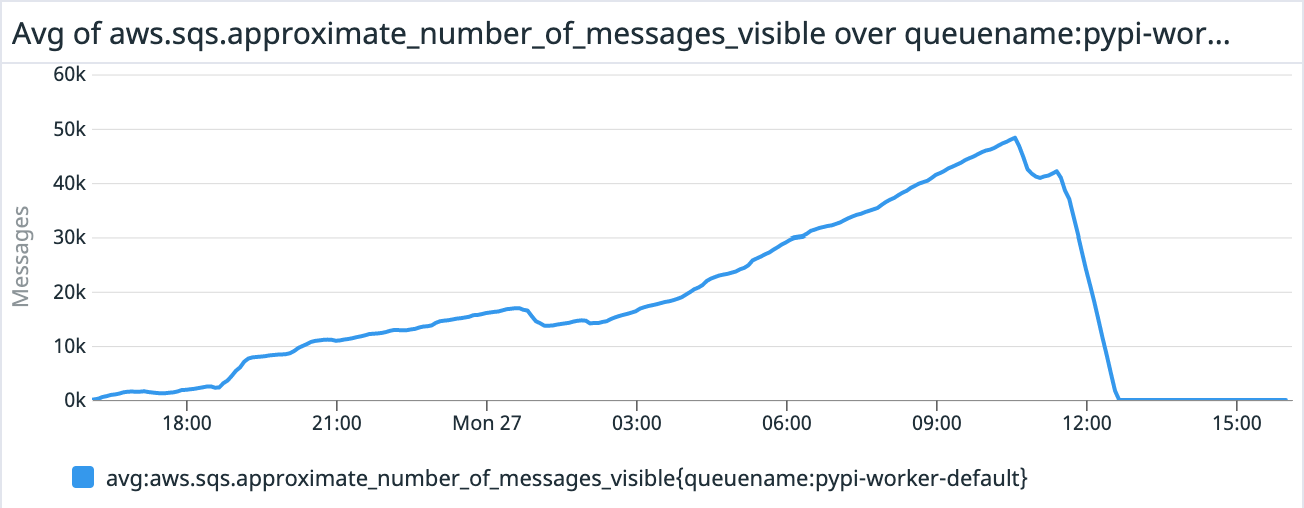

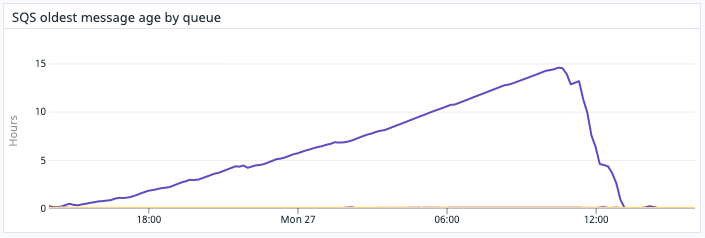

On Mon, 27 Jun 2022 at 15:43 UTC, PyPI admins determined that PyPI’s task queue had a significant backlog, with more than 46K pending tasks, with the oldest task being more than 14 hours old, and began attempting to mitigate the backlog.

PyPI admins determined that the backlog correlated with an increase in CPU usage by the database, and that there were at least two poorly-performing queries: one which had been introduced in the task for the 2FA metrics, and another which was due to a historical implementation detail of how PyPI projects are modeled in the database, where normalized project names are not stored in the database, but generated upon request based on the un-normalized project name

To mitigate the load on the database, PyPI admins immediately began canceling the long-running database queries that resulted from the poorly performing task, in an attempt to remove as many as possible from the queue and free up workers to complete other tasks. Simultaneously, admins prepared two separate changes to mitigate the two poorly-performing queries: one to temporarily disable the poorly performing metrics task, and a second to add a column in the database which stored the normalized project name.

At 15:53 UTC, PyPI admins deployed the first change that disabled the 2FA metrics task, which resulted in stopping additional long-running queries from being created. Admins continued to manually reap the poorly performing query as PyPI’s workers began to drain the backlog of other tasks. At this time, the size of the queue began to decrease.

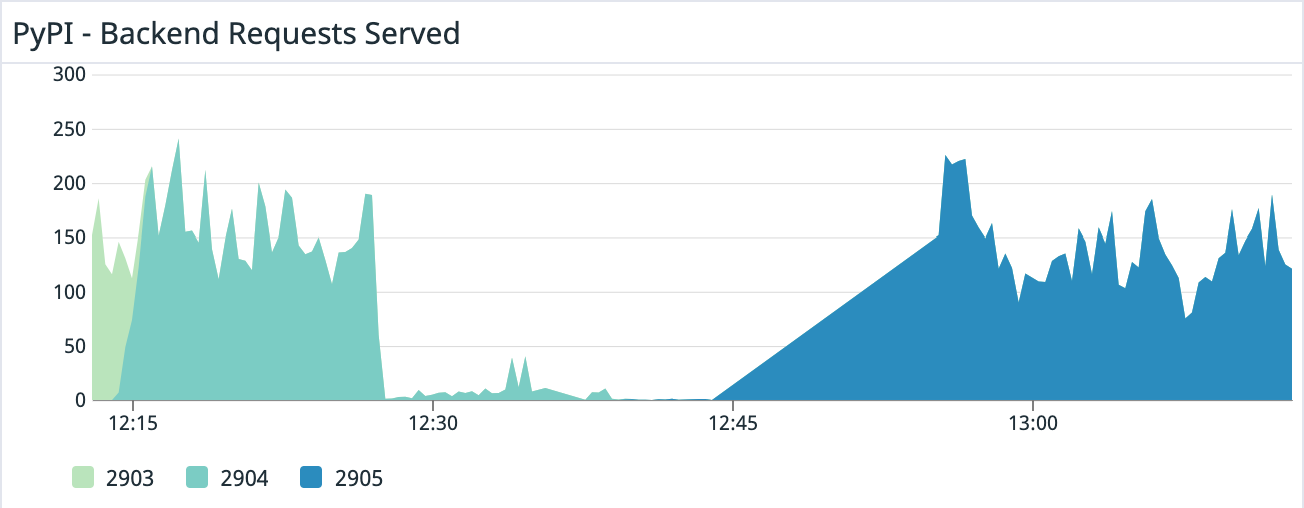

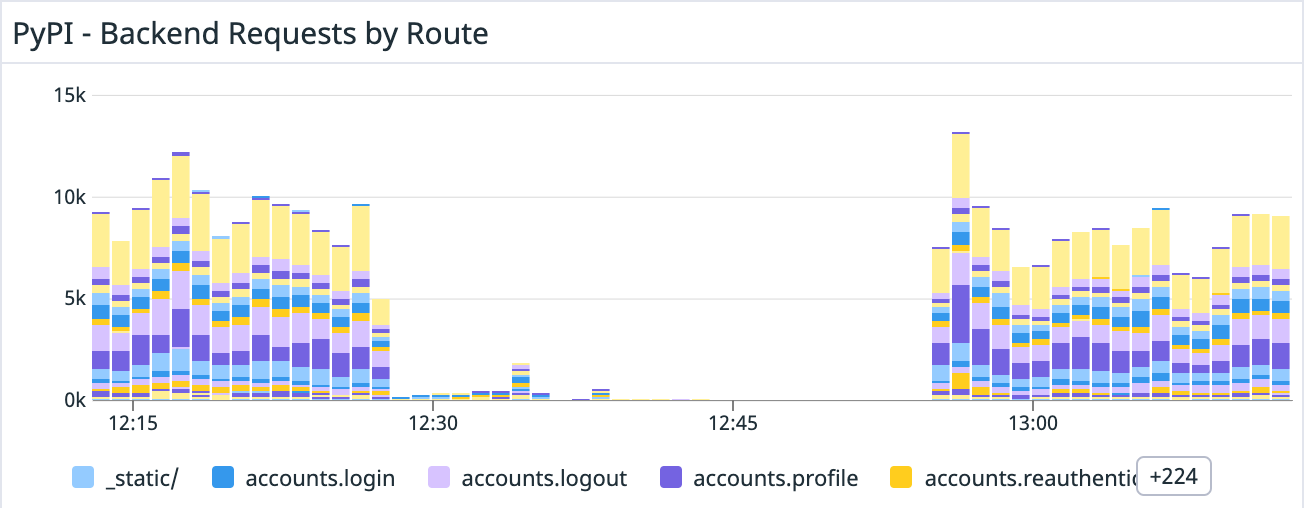

At 16:19 UTC, PyPI admins deployed the second change which added a column for the normalized project name. This included a large migration which unexpectedly locked PyPI’s database for a significant amount of time, resulting in PyPI backends being unable to create new database connections, becoming unhealthy, and causing responses to fail.

At 16:45 UTC, the migration finished and PyPI backends began to recover, however PyPI still continued to intermittently return errors and did not become fully healthy, which was not explicable based on the previous mitigations.

After investigation, PyPI admins determined that workers were crashing due to exceeding their allotted memory usage. Admins determined that the natural increase in requests after having an outage resulted in a large amount of memory being consumed by the new JSON serializer, which had higher memory footprint than the previous JSON serializer.

At 17:41 UTC, PyPI admins deployed a change reverting the new JSON serializer back to the original serializer, and backends eventually returned to healthy status.

Impact

As a result of the task queue becoming increasingly large, user-facing tasks such as CDN purges and emails became more and more delayed. Delayed CDN purges meant that the PEP-503 ‘simple’ API was not getting updated and installers were unable to become aware of newly-published packages or new versions of existing packages. Additionally, the web frontend was not displaying new versions or new packages. Users attempting to create accounts and verify email addresses were unable to do so as well.

At the peak, the task queue had nearly 50K pending tasks, and the oldest task in the queue was more than 14 hours old, whereas this queue normally has less than 100 pending tasks on average and tasks are completed in less than 1 second.

As a result of the locked migration, PyPI backends became unhealthy and served 500-level errors to users, preventing much of the web traffic, API traffic and uploads from succeeding for approximately 40 minutes.

As a result of the locked migration, PyPI backends became unhealthy and served 500-level errors to users, preventing much of the web traffic, API traffic and uploads from succeeding for approximately 40 minutes.

As a result of the increased memory usage due to the new JSON serializer, the PyPI backends did not fully recover from the prior outage and continued to return intermittent errors as pods consumed their available memory and were terminated.

As a result of the increased memory usage due to the new JSON serializer, the PyPI backends did not fully recover from the prior outage and continued to return intermittent errors as pods consumed their available memory and were terminated.

During the entire process, PyPI deployments were delayed due to their dependence on a successful run of a shared CI pipeline which had a significant backlog at the time changes were attempting to be introduced. This impacted the delivery times for changes to mitigate the issues.

Future work

During the outage, it was identified that the service PyPI uses for alerting and monitoring was insufficiently configured to notify the team of an excessively large task queue, which would have given administrators early warning that the 2FA metrics task was failing to complete and that delays were increasing. Additionally, the monitoring system lacked alerts for high CPU utilization in the database which was indicative of poorly performing queries. Alerts for both of these have already been added.

When PyPI admins re-enable the 2FA metrics task, it will be redesigned to speed up queries by denormalizing counts rather than naively counting rows.

In order to mitigate delays in PyPI’s CI pipeline, PyPI admins will migrate PyPI’s source repository to a separate organization, in order to ensure that the CI queue remains small and changes can be quickly deployed.

In order to prevent long-running migrations from locking the database for extended periods of time, PyPI’s migration runner will be modified to make migrations safer, including adding timeouts after a certain short period of time rather than block for an arbitrarily long time.

In order to mitigate complications when attempting to recover from an outage, PyPI admins will implement a policy to ensure that changes which are not directly related to the recovery efforts will not be merged until the outage has been resolved.